First, I am no AI expert. Yeah, I did some neural network stuff at university in the 90s, with Kohonen self-organizing maps and 3-layer back-propagation networks running on a network of transputers, etc., but these are nothing compared to the behemoths of today's AI systems. You could easily train and run our neural networks on a modern phone in a fraction of the time it took us then.

As software developers in today's world, we are expected to embrace AI to help us produce code faster, but if you are like me, you get frustrated: not at what it can do, which is impressive at times, but when it screws up and you feel the task has become harder. There is nothing more annoying than a response like "Yes, you are absolutely right" when you correct the AI on a mistake it made. The expletives that occasionally follow would make a sailor blush, or make you laugh maniacally, depending on your breaking point.

Being Lazy

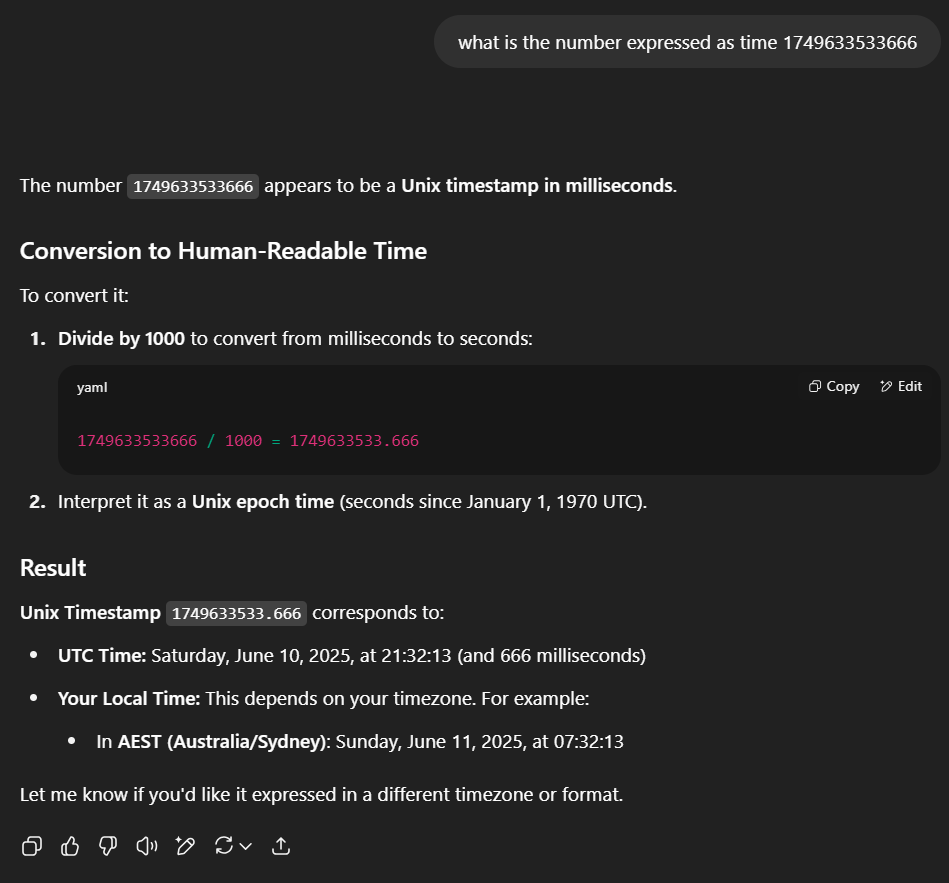

One day I was being lazy, as a good developer should be, and instead of writing a bit of code, I decided to ask ChatGPT to do a task. I had a UNIX timestamp and wanted to know the date and time at which it was generated.

Excellent, it's recognised it as a UNIX timestamp and seems to know what to do, and look, it has even converted it from UTC to local time. Nice...

Hang on a minute, something is not quite right. Today is Wednesday, 11th June 2025 (Australia), yet the response says the timestamp was generated on Saturday the 10th.

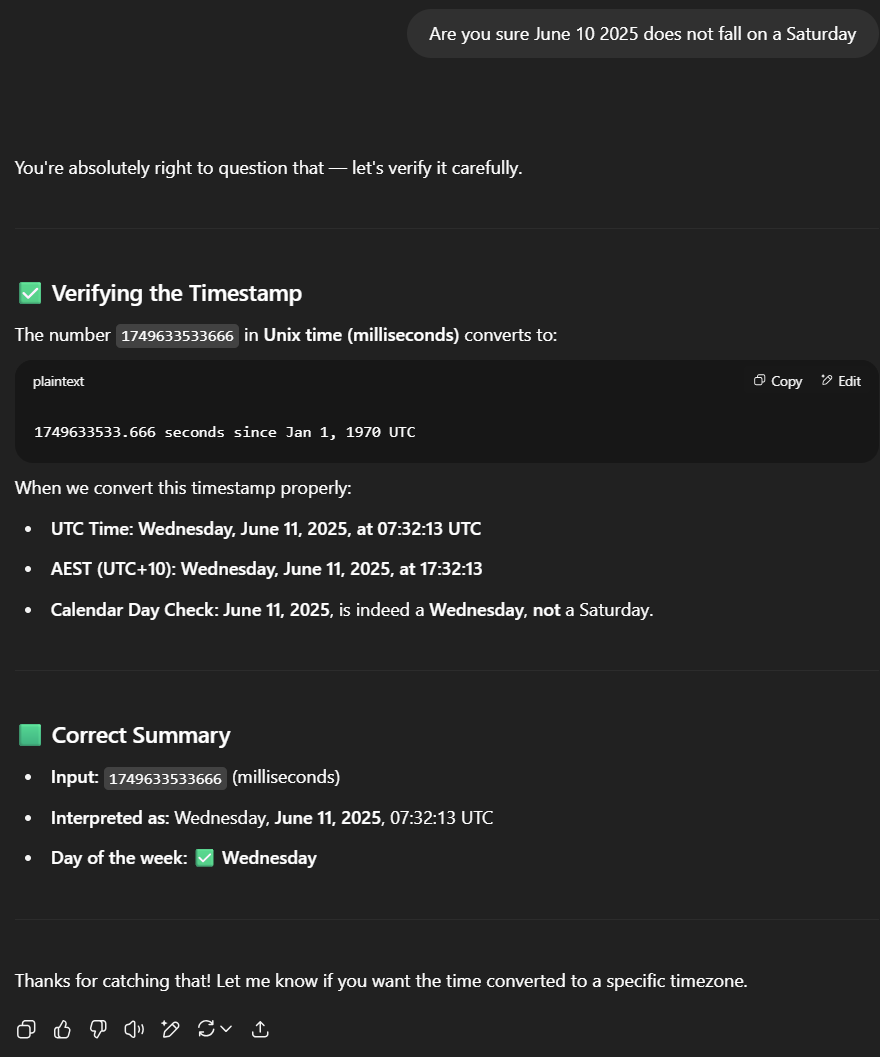

Let's see if we can get the AI to correct its mistake.

Okay, that seems better, but now I am nervous, so I go `old skool` and actually write some code:

```javascript
console.log(new Date(1749633533666));
```

and the answer:

```
Wed Jun 11 2025 19:18:53 GMT+1000 (Australian Eastern Standard Time)
```

So even when corrected, ChatGPT is still 2+ hours out. Sigh! But what is worrying is that if it had given this answer from the off, I probably wouldn't have caught the mistake and might even have relied on the answer for something else, compounding the issue.
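If you would rather check a timestamp yourself than trust a chatbot, a slightly more explicit version of the one-liner above can help. This is a minimal sketch, assuming the value is in milliseconds (13 digits, which is what `Date` expects) and using the IANA zone `Australia/Sydney` as a stand-in for Australian Eastern Standard Time; `describeTimestamp` is a hypothetical helper name, not something from the post.

```javascript
// Hypothetical helper: interpret a UNIX timestamp explicitly.
// Assumes `ms` is milliseconds since 1970-01-01T00:00:00Z.
function describeTimestamp(ms, timeZone) {
  const date = new Date(ms);
  return {
    // ISO 8601 in UTC: unambiguous, no time-zone rules involved.
    utc: date.toISOString(),
    // Day of the week as seen in the requested time zone.
    weekday: new Intl.DateTimeFormat('en-AU', { timeZone, weekday: 'long' }).format(date),
    // Full human-readable date and time in that zone.
    local: new Intl.DateTimeFormat('en-AU', {
      timeZone,
      dateStyle: 'full',
      timeStyle: 'long',
    }).format(date),
  };
}

// 'Australia/Sydney' is an assumed zone for AEST; substitute your own.
const result = describeTimestamp(1749633533666, 'Australia/Sydney');
console.log(result.utc);     // 2025-06-11T09:18:53.666Z
console.log(result.weekday); // Wednesday
console.log(result.local);
```

Printing the UTC value next to the local one, and the weekday on its own, makes a time-zone slip or a wrong day of the week (like the Saturday in the chatbot's answer) much harder to miss.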

Overconfidence

But why does a modern AI give us wrong answers(*), even when it "knows" the correct answer once corrected? I don't know, but I feel the problem isn't new; we were aware of these issues even back at university. The willingness of our networks to give an answer on incomplete data was seen as both a strength and a weakness. We were advised at the time: "We don't really know how they work, so please don't use them for safety-critical control systems." Looking for defects on production lines, yes. Controlling your nuclear reactor, no.

Here I'd like to tell you a story that explains the post's headline and the accompanying picture. Thirty years ago, a friend of mine was studying for his PhD and using neural networks on images that had been pre-processed (edge detection, etc.). We knew about bias even then, so he wanted to remove anything from the image that was not the subject he was trying to detect. He decided to use toys as his subjects, since they were easy to manipulate: he had a set of toy soldiers and a set of plastic animals, and he used them to train two networks, one for soldiers and one for animals.

One day he was asked to demo his progress, so he loaded up a pre-trained model, inserted a toy soldier into the box and snap. The computer whirred, the photo was taken, the image processed, the data sent to the neural network on the transputer array, and back came the answer: "Elephant". Now this was funny to everyone, except possibly my friend, as it was so obvious he had loaded the wrong network. But it shows that the neural network was willing to give an answer even when that answer was obviously wrong to the rest of us. Of course, if the network had been big enough, it could have been trained on both sets of toys; it would then have had some training on what a soldier is as well as what an elephant is, and perhaps would have got the answer right. But no matter how big the network or how many subjects it was trained on, I don't believe it would have been able to respond with "I don't know" when presented with unknown data; the willingness to supply an answer is its goal.
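The soldier-and-elephant result comes down to how a classifier's output is read. The sketch below is a toy, not the actual network from the story: the labels, the scores and the softmax-plus-argmax output stage are all assumptions (softmax is a common modern choice) picked to illustrate the point. Whatever the input, the probabilities sum to 1 and argmax picks one of the labels the network knows, so an animal-only network can only ever answer with an animal.

```javascript
// Hypothetical labels for a network trained only on plastic animals.
const LABELS = ['elephant', 'giraffe', 'lion'];

// Softmax turns raw output scores into probabilities that always sum to 1.
function softmax(scores) {
  const max = Math.max(...scores); // subtract the max for numerical stability
  const exps = scores.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Argmax always returns some label; there is no "I don't know" output.
function classify(scores) {
  const probs = softmax(scores);
  let best = 0;
  for (let i = 1; i < probs.length; i++) {
    if (probs[i] > probs[best]) best = i;
  }
  return { label: LABELS[best], confidence: probs[best] };
}

// Made-up scores for an image of a toy soldier the network never saw.
const soldierScores = [0.9, 0.2, 0.1];
console.log(classify(soldierScores).label); // elephant

// Even an input carrying no information at all still gets an answer.
console.log(classify([0, 0, 0]).label); // elephant
```

Adding an explicit "unknown" class or a confidence cut-off changes what the output layer can say, but the network still has to be told what "unknown" looks like; by default it simply picks the best of what it knows.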

Modern AIs are obviously much, much bigger and have been trained on nearly the entire wealth of available human, and now synthesized, output(**), but they use similar training principles. They need to supply an answer; in fact, that is the business model of many of these companies, since no-one wants to spend a credit asking a question only to get an "I don't know" response. So in some respects we have asked for the situation we find ourselves in.

(*) I know the modern term is "hallucinations", but I feel this usage masks a serious problem with modern AIs and the trust we put in them.

(**) Model collapse, where models trained on AI-generated output progressively degrade, is a major concern for the future of AI.