The other day we encountered a very unusual scenario with one of our production systems that, even after many years of working with AWS Lambdas, took us by surprise (well, at least me).

Background

We updated the environment variables of a number of Lambdas in our stack because several systems were being deprecated and now had newer replacements. We were really proud of ourselves: we had done this well in advance of the old systems being switched off, and the platform worked as expected with the new configuration, so we patted ourselves on the back and moved on. Then the legacy system was switched off and one (of many) Lambdas started to bleat. Looking at the logs, we could see it was still trying to talk to the old system, and yet the AWS Console showed the new configuration for that Lambda. What was going on!?

Investigation

As mentioned, we could see the new configuration in the AWS Console, and yet the Lambda was still using the old configuration. We tried the usual knee-jerk fixes, such as forcing a redeploy. We weren't hopeful, as the application stack this Lambda belongs to is often deployed several times a week due to npm and Terraform module updates, but hey, it was worth a try. We could see the new stack had been deployed, because one of our environment variables holds the latest build number and that had changed, and yet the important environment variable pointing at the new infrastructure was still not being picked up. A review of the code also led to more head-scratching, as the Lambda was actually very trivial: validate some incoming data and ping the newer service with the result. It used only a couple of libraries, for logging, so it really should have been idiot-proof. If any Lambda in this stack was going to cause us issues, this one was at the bottom of the list.

It turns out that the simplicity of the Lambda was its downfall. Despite all the deploys of the stack, the generated output code of that particular Lambda never actually changed, i.e. the JavaScript was identical and had remained the same for at least the past month. It would appear that the uploaded code needs to physically differ for a Lambda to pick up new configuration from its environment variables. So we made a small tweak to the code (an extra logging line), and after the next deploy we could see the new config was now being used. Crisis averted!

Solution/Workaround

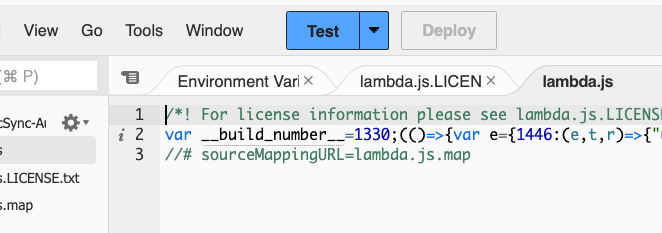

To avoid running into this situation again, we needed to make sure the code changes on every deploy, thus forcing a refresh of the configuration supplied via environment variables (these aren't secrets, by the way). We initially thought about generating a file containing a build number or date and pulling that into each Lambda, but that felt messy. So our thoughts turned to our build system, and we identified a webpack plugin called BannerPlugin that can inject snippets into the header or footer of the output file, i.e. our Lambda code. What we ended up doing was something like the following in our webpack configuration:

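```typescript
import { BannerPlugin, Configuration } from 'webpack';

// Fall back to '0' when BUILD_NUMBER isn't set (e.g. local builds).
const buildNumber = process.env.BUILD_NUMBER ?? '0';

const config: Configuration = {
  // ...the rest of your existing configuration (entry, target, output, etc.)
  plugins: [
    // Prepend a line to each emitted file so every build's output differs.
    new BannerPlugin({
      // JSON.stringify quotes the value, so non-numeric build IDs stay valid JavaScript.
      banner: `var __build_number__ = ${JSON.stringify(buildNumber)};`,
      // Insert the banner as-is rather than wrapping it in a comment.
      raw: true,
    }),
    // ...any other plugins
  ],
};

export default config;
```

Now each Lambda we produce using this config is stamped with the build number, making every incarnation we deploy unique.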
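To see why stamping the bundle is enough, here is a minimal sketch that hashes a bundle with and without a build-number banner. It assumes your deployment tooling only treats the code as changed when a hash of the artifact changes (in Terraform, `source_code_hash` on `aws_lambda_function` is set to a base64-encoded SHA-256 of the package for exactly this purpose). The `bundleHash` helper and the sample handler body are hypothetical, and the hash is taken over the raw JavaScript rather than a zipped package to keep things simple.

```typescript
import { createHash } from "node:crypto";

// Hypothetical stand-in for the compiled output of a trivial Lambda that never changes.
const unchangedBody = `exports.handler = async (event) => { /* validate and forward */ };`;

// Prepend a banner roughly as BannerPlugin's `raw: true` option would,
// then hash the result as a deploy tool might (base64-encoded SHA-256).
function bundleHash(buildNumber: string | null, body: string): string {
  const banner =
    buildNumber === null ? "" : `var __build_number__ = ${JSON.stringify(buildNumber)};\n`;
  return createHash("sha256").update(banner + body).digest("base64");
}

const withoutStampA = bundleHash(null, unchangedBody);
const withoutStampB = bundleHash(null, unchangedBody);
const build41 = bundleHash("41", unchangedBody);
const build42 = bundleHash("42", unchangedBody);

console.log(withoutStampA === withoutStampB); // true: nothing looks different to deploy
console.log(build41 === build42); // false: each build now yields a distinct artifact
```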

Note: if you don't have a build number to hand, you could use Date.now() or some other mechanism to generate a sufficiently unique value.
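A minimal sketch of that fallback, assuming the same `BUILD_NUMBER` environment variable used in the webpack config. `buildBanner` is a hypothetical helper; taking `now` as a parameter just keeps it easy to test. Wrapping the stamp in `JSON.stringify` turns it into a quoted JavaScript string literal, so a non-numeric stamp such as a commit SHA won't produce invalid output.

```typescript
// Hypothetical helper: returns the raw banner string to hand to BannerPlugin.
// Prefers the CI build number and falls back to the current time in milliseconds.
export function buildBanner(
  env: Record<string, string | undefined>,
  now: () => number = Date.now,
): string {
  const stamp = env.BUILD_NUMBER ?? String(now());
  // JSON.stringify emits a quoted, escaped string literal such as "1234".
  return `var __build_number__ = ${JSON.stringify(stamp)};`;
}

// Usage in webpack.config.ts:
//   new BannerPlugin({ banner: buildBanner(process.env), raw: true })
console.log(buildBanner({ BUILD_NUMBER: "1234" })); // var __build_number__ = "1234";
console.log(buildBanner({}, () => 1700000000000)); // var __build_number__ = "1700000000000";
```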

Feedback

As always, your thoughts are appreciated.

Photo by Brett Jordan on Unsplash